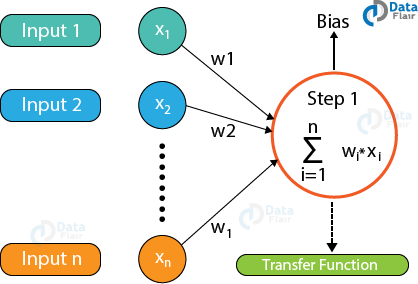

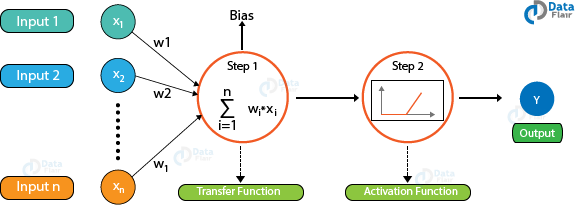

Once the transfer function has calculated the sum, it passes the result to the activation function.

Based on the value it receives, the activation function decides what the node fires. For example, if the value received is above 0.5, the activation function fires a 1; otherwise the output remains 0.
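The thresholding described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function names `transfer` and `step_activation`, the sample inputs and weights, and the choice of a weighted sum with an optional bias as the transfer function are all assumptions made for the example.

```python
def transfer(inputs, weights, bias=0.0):
    """Hypothetical transfer function: weighted sum of the inputs plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def step_activation(value, threshold=0.5):
    """Fire 1 if the summed value is above the threshold, otherwise stay at 0."""
    return 1 if value > threshold else 0


# Illustrative values only: two inputs with made-up weights.
total = transfer([1.0, 0.5], [0.4, 0.6])
fired = step_activation(total)
print(total, fired)
```

A value exactly equal to the threshold does not fire here, because the text says "above 0.5".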

Popular activation functions include sigmoid, ReLU, and tanh. The value that the node fires determines the final output.
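For reference, the three activation functions named above can be written with the standard library alone. This is a small sketch using their usual textbook definitions; the function names are chosen for the example.

```python
import math


def sigmoid(x):
    """Squash x into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Pass positive values through unchanged and clip negatives to 0."""
    return max(0.0, x)


def tanh(x):
    """Squash x into the range (-1, 1)."""
    return math.tanh(x)


# Compare the three functions on a few sample inputs.
for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), relu(value), tanh(value))
```

Sigmoid and tanh saturate for large positive or negative inputs, while ReLU grows without bound for positive inputs.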

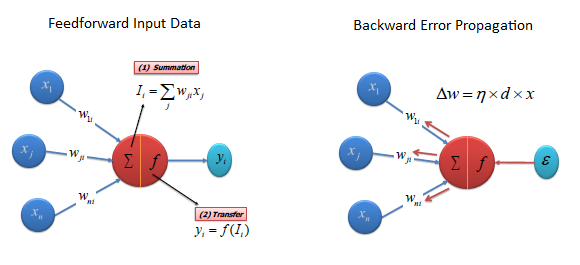

The difference between the predicted value and the actual value (the error) is propagated backward through the network and apportioned to each node’s weights according to how much of the error that node is responsible for.
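To make the idea of apportioning the error concrete, here is a minimal sketch of one backpropagation step. It assumes a tiny network with two inputs, two sigmoid hidden nodes, one sigmoid output node, no biases, a squared-error loss, and plain gradient descent. The function name `train_step`, the starting weights, and the learning rate are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_step(x, target, w_hidden, w_out, lr=0.5):
    """Run one forward and backward pass; return updated weights and the loss."""
    # Forward pass: each hidden node sums its weighted inputs, then activates.
    hidden = [sigmoid(sum(xi * wi for xi, wi in zip(x, ws))) for ws in w_hidden]
    y = sigmoid(sum(h * w for h, w in zip(hidden, w_out)))

    # Error at the output (predicted minus actual), scaled by the sigmoid slope.
    error = y - target
    delta_out = error * y * (1.0 - y)

    # Each hidden node's share of the error is proportional to the weight
    # connecting it to the output, scaled by its own sigmoid slope.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]

    # Gradient-descent update: move each weight against its share of the error.
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [
        [w - lr * d * xi for w, xi in zip(ws, x)]
        for ws, d in zip(w_hidden, delta_hidden)
    ]
    return new_w_hidden, new_w_out, 0.5 * error ** 2


# Illustrative starting weights and a single made-up training example.
w_hidden = [[0.1, 0.2], [0.3, 0.4]]
w_out = [0.5, -0.5]
losses = []
for _ in range(200):
    w_hidden, w_out, loss = train_step([1.0, 0.0], 1.0, w_hidden, w_out)
    losses.append(loss)
print(losses[0], losses[-1])
```

In this sketch a hidden node with a larger outgoing weight receives a larger share of the output error, which is the sense in which the error is apportioned by responsibility.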

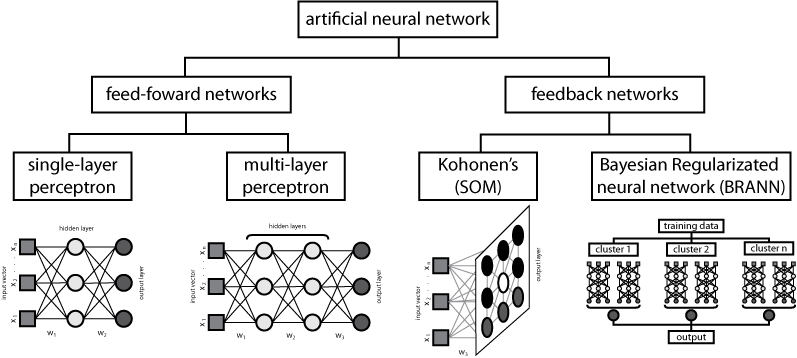

There are many types of ANN. The figure shows a general classification of neural networks and the ANN techniques in common use.
