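The activation function translates a neuron's input signals into its output signal: it takes the net input (the weighted sum of the incoming signals) and maps it to the value the neuron passes on.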
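
The Python sketch below shows where the activation function sits in a single neuron. The incoming signals are combined into a net input (a weighted sum), and the activation function maps that net input to the output signal. The helper name `neuron_output` and the bias term are assumptions added for illustration, not part of the original text.

```python
from typing import Callable, Sequence


def neuron_output(
    inputs: Sequence[float],
    weights: Sequence[float],
    bias: float,
    activation: Callable[[float], float],
) -> float:
    """Hypothetical helper: weighted sum of the inputs plus a bias, passed through `activation`."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    net = sum(x * w for x, w in zip(inputs, weights)) + bias  # net input
    return activation(net)  # the activation maps net input -> output signal


# With the identity function as the activation, the output equals the net input.
print(neuron_output([1.0, 2.0], [0.5, -0.25], 0.1, lambda v: v))  # prints 0.1
```

Any of the activation functions below can be passed as `activation`; the neuron's structure stays the same and only the mapping from net input to output changes.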

Five types of activation functions are commonly used:

- Unit step (threshold):

The output is set at one of two levels, depending on whether the total input is greater than or less than some threshold value.

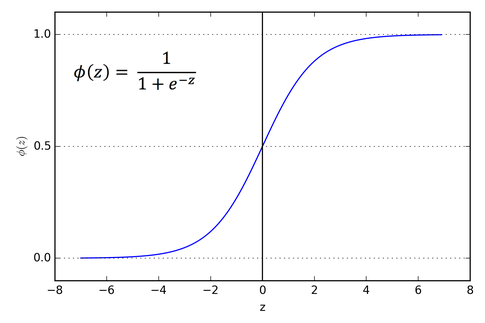

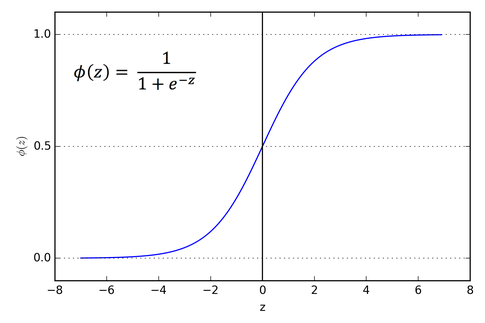
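
A minimal Python sketch of the unit step function. The two output levels default to 0 and 1 and the threshold to 0; these values, and the choice to send a net input exactly equal to the threshold to the upper level, are assumptions, since the text only says the output depends on whether the input is above or below the threshold.

```python
def unit_step(net: float, threshold: float = 0.0, low: float = 0.0, high: float = 1.0) -> float:
    """Two-level output: `high` if the net input reaches the threshold, otherwise `low`.

    Assumption: a net input exactly equal to the threshold maps to `high`.
    """
    return high if net >= threshold else low


print([unit_step(v) for v in (-0.5, 0.0, 0.7)])           # [0.0, 1.0, 1.0]
print(unit_step(0.3, threshold=0.5, low=-1.0, high=1.0))  # -1.0 (bipolar levels)
```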

- Sigmoid:

The sigmoid is similar to the unit step function, but instead of jumping abruptly from one level to the other it passes smoothly through a region of uncertainty, where the output takes intermediate values.

Two sigmoid functions are commonly used: the logistic function and the hyperbolic tangent (tanh).

The logistic function produces values between 0 and 1, and the hyperbolic tangent produces values between -1 and +1.

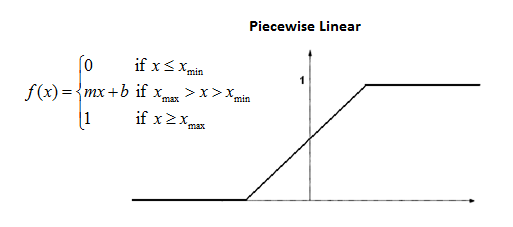

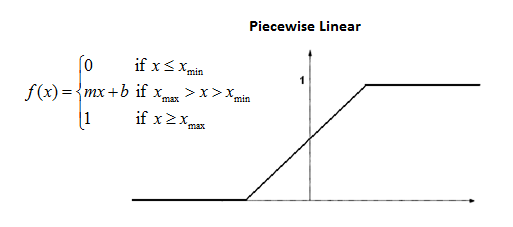
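
The two sigmoid functions can be sketched in Python as follows. The logistic function is written as 1 / (1 + e^(-net)), rearranged for negative inputs so that `math.exp` does not overflow, and the hyperbolic tangent uses the standard library's `math.tanh`. The function names are illustrative.

```python
import math


def logistic(net: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-net); outputs lie between 0 and 1."""
    if net >= 0:
        return 1.0 / (1.0 + math.exp(-net))
    # Equivalent form for negative inputs that avoids overflow in math.exp(-net).
    z = math.exp(net)
    return z / (1.0 + z)


def hyperbolic_tangent(net: float) -> float:
    """Hyperbolic tangent; outputs lie between -1 and +1."""
    return math.tanh(net)


for v in (-4.0, 0.0, 4.0):
    print(f"net={v:+.1f}  logistic={logistic(v):.4f}  tanh={hyperbolic_tangent(v):.4f}")
```

Near zero both functions change gradually (the region of uncertainty); far from zero they level off toward their bounds, which is why they resemble a smoothed unit step.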

- Piecewise linear:

Within a limited range, the output is proportional to the total weighted input; outside that range it is held at a fixed minimum or maximum level.
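
A minimal Python sketch, assuming the common saturating form of the piecewise linear function: the output equals the slope times the net input while that value stays inside [low, high], and is clipped to the nearest bound outside it. The slope and the bounds 0 and 1 are illustrative assumptions.

```python
def piecewise_linear(net: float, slope: float = 1.0, low: float = 0.0, high: float = 1.0) -> float:
    """Output proportional to the net input, clipped to the interval [low, high]."""
    return max(low, min(high, slope * net))


print([piecewise_linear(v) for v in (-0.3, 0.4, 2.0)])  # [0.0, 0.4, 1.0]
```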

- Gaussian:

Gaussian functions are continuous, bell-shaped curves.

The node output (high/low) is interpreted in terms of class membership (1/0), depending on how close the net input is to a chosen average (mean) value.

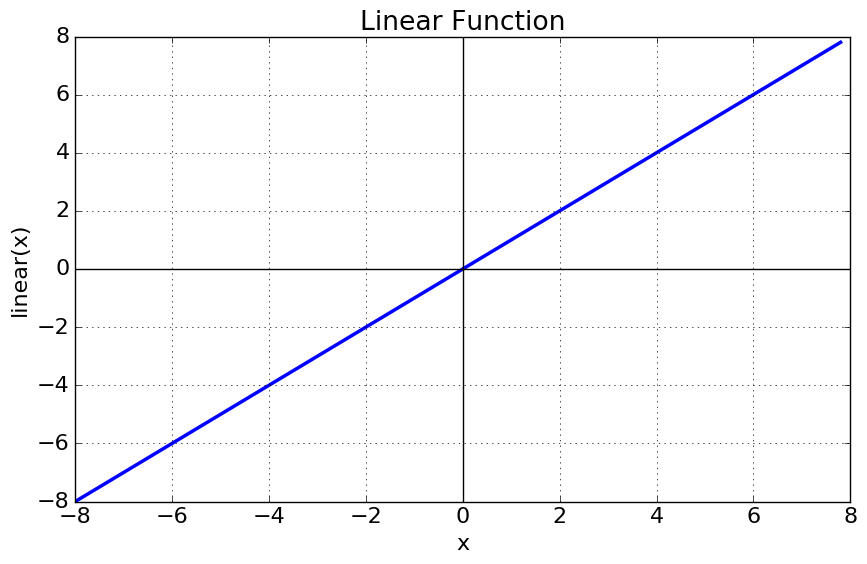

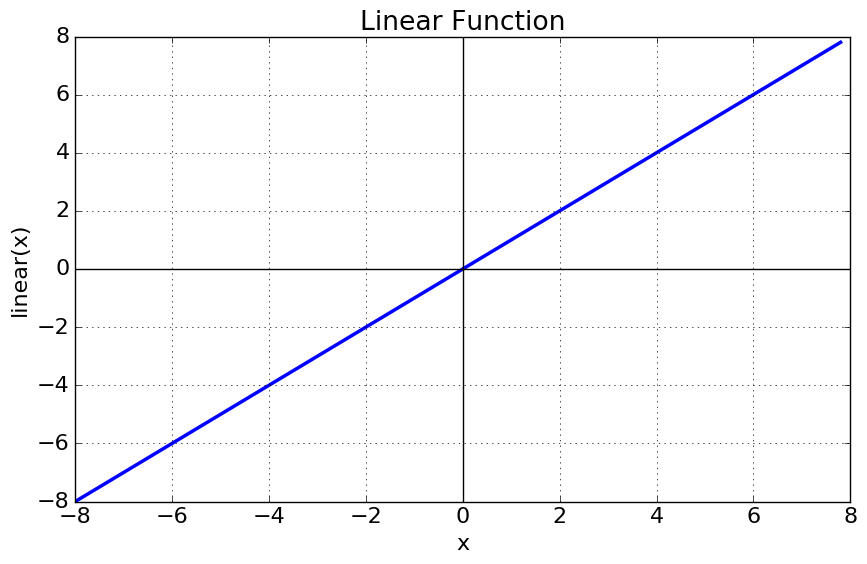
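
The Gaussian activation and its class-membership reading can be sketched in Python as below. The sketch assumes an unnormalized Gaussian that peaks at 1 when the net input equals the chosen mean, a width `sigma`, and a cutoff of 0.5 for deciding between a high (1) and low (0) output; these parameter names and default values are illustrative assumptions.

```python
import math


def gaussian(net: float, mean: float = 0.0, sigma: float = 1.0) -> float:
    """Bell-shaped response: 1.0 when net == mean, falling toward 0 as net moves away."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return math.exp(-((net - mean) ** 2) / (2.0 * sigma ** 2))


def class_membership(net: float, mean: float = 0.0, sigma: float = 1.0, cutoff: float = 0.5) -> int:
    """Map a high output (>= cutoff) to membership 1 and a low output to 0 (cutoff is assumed)."""
    return 1 if gaussian(net, mean, sigma) >= cutoff else 0


for v in (0.2, 1.0, 3.0):
    print(v, round(gaussian(v), 4), class_membership(v))
```

Narrowing `sigma` makes the node respond only to net inputs very close to the mean; widening it accepts a broader range as class members.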

- Linear:

Like linear regression (which models the relationship between two variables by fitting a linear equation to observed data), a linear activation function maps the neuron's weighted sum of inputs to its output using a linear function.
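
A minimal Python sketch of a linear activation applied to a neuron's weighted sum. The helper names and the slope and intercept parameters are illustrative; with the defaults (slope 1, intercept 0) the function passes the weighted sum through unchanged.

```python
from typing import Sequence


def linear(net: float, slope: float = 1.0, intercept: float = 0.0) -> float:
    """Linear activation: the output is slope * net + intercept."""
    return slope * net + intercept


def weighted_sum(inputs: Sequence[float], weights: Sequence[float]) -> float:
    """Net input of the neuron: sum of each input multiplied by its weight."""
    return sum(x * w for x, w in zip(inputs, weights))


net = weighted_sum([2.0, 4.0], [0.5, 0.25])    # 1.0 + 1.0 = 2.0
print(linear(net))                             # 2.0 (identity with default parameters)
print(linear(net, slope=3.0, intercept=-1.0))  # 5.0
```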
