Artificial neural networks are computing systems vaguely inspired by the biological neural networks that constitute animal brains. Such systems “learn” to perform tasks by considering examples, generally without being programmed with task-specific rules. For example, in image recognition, they might learn to identify images that contain dogs by analyzing example images that have been manually labeled as “dog” or “no dog” and using the results to identify dogs in other images.

They do this without any prior knowledge of dogs, for example, that they have fur, tails, whiskers and dog-like faces. Instead, they automatically generate identifying characteristics from the examples that they process.
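As a rough illustration of learning from labeled examples, here is a minimal sketch using the classic perceptron update rule, which is one simple learning method among many and not necessarily what a real image recognizer would use. The two-number feature vectors, the `examples` list, and the function names are all hypothetical stand-ins for real image data.

```python
# Hypothetical toy data: (feature vector, label), where 1 = "dog" and 0 = "no dog".
# Real systems would work on pixel data, not two hand-picked numbers.
examples = [
    ([1.0, 1.0], 1),
    ([0.9, 0.8], 1),
    ([0.1, 0.2], 0),
    ([0.0, 0.1], 0),
]


def predict(weights, bias, features):
    """Return 1 ("dog") if the weighted sum of the features is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0, or 1
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(examples)
predictions = [predict(weights, bias, features) for features, _ in examples]
# Classify a feature vector that was not in the training set.
unseen = predict(weights, bias, [0.8, 0.9])
```

Nothing in the code states what makes an example a "dog". The weights start at zero and are shaped only by the labeled examples, which is the point the paragraph above makes.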

The Notion

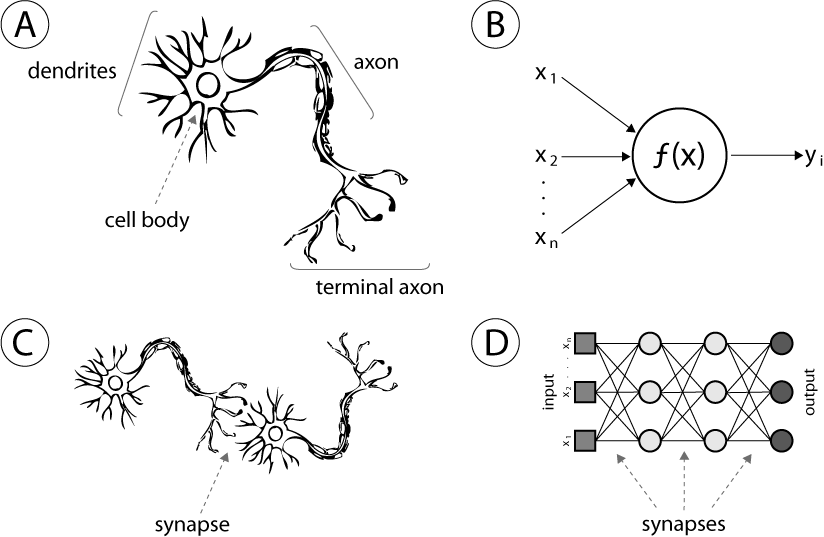

A neural network is an assembly of simple, interconnected processing units (also called nodes or elements) whose functionality is loosely based on the biological neuron. The network's processing capability is stored in the strengths of the connections between units (the weights), which are obtained by learning, a process of adaptation to a set of training patterns. One aim of neural network systems is to perform certain computational tasks much faster than conventional systems.

Examples of such computational tasks include text-to-speech conversion, zip code recognition, and function approximation. The figure shows (A) a human neuron, (B) an artificial neuron or hidden unit, (C) a biological synapse, and (D) ANN synapses.
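The artificial neuron in panel (B) can be sketched in a few lines. This is a minimal example that assumes a sigmoid activation function, which is a common choice but is not specified in the text above. The function name `neuron_output` and the sample numbers are illustrative only.

```python
import math


def neuron_output(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs passed through a sigmoid."""
    # Each weight plays the role of a connection strength (an ANN "synapse").
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The sigmoid squashes the sum into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-weighted_sum))


# Two inputs with illustrative weights; the result is a value between 0 and 1.
output = neuron_output([0.5, -1.0], [0.8, 0.2], 0.0)
```

Changing the weights changes the output for the same inputs, which is why the network's capability is said to be stored in its connection strengths.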

