Step 2. Backward Propagation

Now we propagate backwards through the network, adjusting the weights and biases to reduce the error. Taking W5 as an example, we calculate the rate of change of the total error with respect to W5.
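One way to write this rate of change is with the chain rule, broken into the three factors that the following paragraphs walk through. The symbols here are assumed notation rather than taken from the text: $E_{total}$ is the total error, $out_{O1}$ is the output of O1, and $net_{O1}$ is its total net input.

$$
\frac{\partial E_{total}}{\partial W_5}
= \frac{\partial E_{total}}{\partial out_{O1}}
\cdot \frac{\partial out_{O1}}{\partial net_{O1}}
\cdot \frac{\partial net_{O1}}{\partial W_5}
$$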

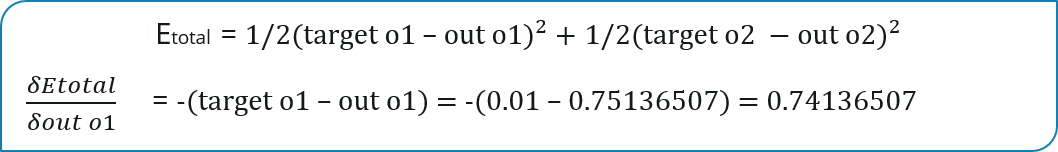

Since we are propagating backwards, the first thing we need to calculate is the change in the total error with respect to the outputs O1 and O2.
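As a sketch of this step for O1, assume the total error is the sum of squared errors over both outputs, $E_{total} = \tfrac{1}{2}(target_{O1} - out_{O1})^2 + \tfrac{1}{2}(target_{O2} - out_{O2})^2$. This error function and the symbol names are assumptions; a different error function would give a different derivative. Only the first term depends on $out_{O1}$, so:

$$
\frac{\partial E_{total}}{\partial out_{O1}}
= -\left(target_{O1} - out_{O1}\right)
= out_{O1} - target_{O1}
$$

The derivative with respect to $out_{O2}$ has the same form with the O2 quantities.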

Now, we will propagate further backwards and calculate the change in output O1 with respect to its total net input.
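What this derivative looks like depends on the activation function. Assuming a sigmoid activation (an assumption, since this section does not name the activation), $out_{O1} = \frac{1}{1 + e^{-net_{O1}}}$, and the derivative can be written in terms of the output itself:

$$
\frac{\partial out_{O1}}{\partial net_{O1}}
= out_{O1}\left(1 - out_{O1}\right)
$$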

Finally, how much does the total net input of O1 change with respect to W5?
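If the net input of O1 is a weighted sum such as $net_{O1} = W_5 \cdot out_{H1} + W_6 \cdot out_{H2} + b_2$, then its derivative with respect to W5 is simply $out_{H1}$. The sketch below multiplies the three factors together and checks the result against a numerical estimate. It rests on several assumptions that are not stated in this section: W5 connects hidden neuron H1 to O1, the activation is a sigmoid, and the error is the squared error. All numeric values and variable names are hypothetical, chosen only for illustration.

```python
import math


def sigmoid(x):
    """Logistic activation (assumed; the section does not name the activation)."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical values, for illustration only (not taken from the article).
out_h1, out_h2 = 0.6, 0.7      # outputs of hidden neurons H1 and H2
w5, w6, b2 = 0.4, 0.45, 0.6    # assumed: W5 links H1 -> O1, W6 links H2 -> O1
target_o1 = 0.01               # desired output of O1


def error_o1(w5_value):
    """Squared error of O1 as a function of W5 (assumed error function)."""
    net = w5_value * out_h1 + w6 * out_h2 + b2
    return 0.5 * (target_o1 - sigmoid(net)) ** 2


# Forward pass for O1.
net_o1 = w5 * out_h1 + w6 * out_h2 + b2
out_o1 = sigmoid(net_o1)

# The three chain-rule factors.
d_error_d_out = out_o1 - target_o1      # change in error w.r.t. output O1
d_out_d_net = out_o1 * (1.0 - out_o1)   # change in output w.r.t. net input
d_net_d_w5 = out_h1                     # change in net input w.r.t. W5

grad_w5 = d_error_d_out * d_out_d_net * d_net_d_w5

# Central finite difference as an independent check. The error of O2 does
# not depend on W5 under these assumptions, so checking O1's error suffices.
eps = 1e-6
numeric_grad = (error_o1(w5 + eps) - error_o1(w5 - eps)) / (2 * eps)

print(grad_w5, numeric_grad)
```

The two printed values should agree closely. Comparing an analytic gradient against a finite-difference estimate like this is a common sanity check when deriving backpropagation by hand.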
