Consider the neural network:

|

|

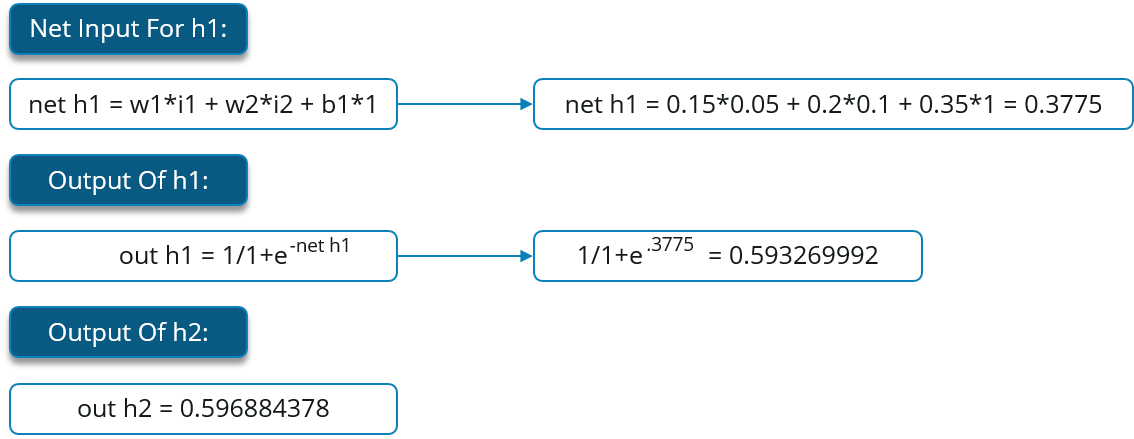

- Forward propagation

- Backward propagation

- Putting all the values together and calculating the updated weight value

We will start by propagating forward.

We will repeat this process for the output layer neurons, using the output from the hidden layer neurons as inputs.

Now, let’s see what is the value of the error:

| The boxer is ready to call time (end) on his long career. |