(Detailed, Second-to-none Comprehensive Specifications)

According to a study, students in computer courses learn much more by building large-scale exercises than by writing many small-scale test programs, which give fragmented knowledge rather than a solid understanding of the system.

Development Requirements

When developing the exercise, follow the three requirements below:

- The system entry page has to be located at http://undcemcs02.und.edu/~user.id/525/1/

- All pages have to be hosted by http://undcemcs02.und.edu/~user.id/

- The systems have to remain active after being graded, until the end of this semester. They will be re-checked for plagiarism from time to time.

(Soft) Due Date and Submission Methods

On or before Friday, October 03, 2025. Send an email including

- the “CREATE” SQL commands and

- the password for displaying the source code online (only one password for all exercises and interfaces).

†Note that you are allowed to use any languages and tools for this exercise, but the exams will focus on PHP and MySQL unless otherwise specified.


Background and Objectives

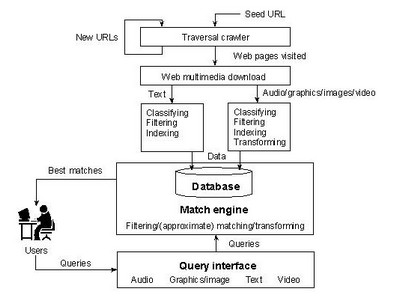

A World Wide Web search engine includes the following three major components:


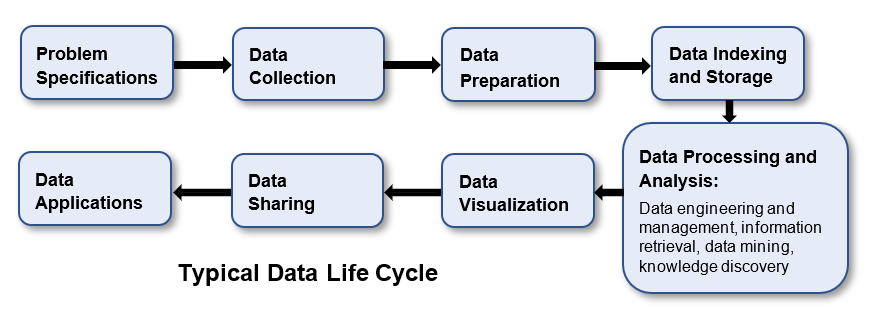

This exercise is for students to learn and practice the phases of the data life cycle (including data collection, indexing, storage, search, and ranking) by implementing a focused web search engine.


The Requirements

The search engine performs three tasks: (i) crawling and data collection, (ii) indexing, and (iii) search and ranking, with the following requirements:

- Starting:

- (System reset: 06%) The system can be reset, which clears all data stored in the database and files, so the instructor can test the system using only his own test data. That is, the system has to include a button such as “Clear system” on the system entry page.

- Crawling and data collection:

- (Page number limit: 06%) Users can specify the maximum number of web pages to be indexed. To save database space, no more than 500 pages may be indexed. This requirement ensures that no one searches more than the maximum number of indexed pages.

- (Web page crawling and collection: 40%)

This requirement checks how well your crawler works.

You should collect as many pages as possible without exceeding the maximum page number.

The more pages you collect, the higher your score will be.

Note that

- This is a “focused” search engine; i.e., all the indexed pages should be under the seed URL entered by users. For example, if the seed URL is “https://www.w3schools.com/xml/,” then you can only index pages with URLs beginning with “https://www.w3schools.com/xml/.” Other pages in the results will not be counted.

- Several seed URLs may be used, and pages are collected cumulatively.

- NO multimedia files, such as .jpg and .wav, need to be saved; i.e., only save the pages that can be indexed (unless you are able to index those multimedia files).

- Do not save a page twice.

- A breadth- or depth-first tree traversal is usually used to visit web pages.
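The crawling rules above (stay under the seed URL, skip multimedia files, never save a page twice, stop at the page limit) can be sketched as a breadth-first crawl. This is a minimal PHP sketch under stated assumptions, not a reference solution: the function names `crawl`, `inScope`, and `resolve`, the skipped-extension list, and the injected `$fetch` callable are all hypothetical. Links are found with a simple regular expression that only sees quoted `href` values, and relative URLs are resolved in a simplified way (no `../` handling, ports ignored).

```php
<?php
// Sketch of a focused breadth-first crawler (all names here are hypothetical).

const MAX_PAGES = 500;                                             // hard cap from the specification
const SKIP_EXT  = ['jpg', 'jpeg', 'png', 'gif', 'wav', 'mp3', 'mp4']; // assumed multimedia list

// True if $url starts with the seed URL and does not end in a skipped extension.
function inScope(string $url, string $seed): bool {
    if ($url === '' || strpos($url, $seed) !== 0) {
        return false;
    }
    $path = (string) parse_url($url, PHP_URL_PATH);
    $ext  = strtolower(pathinfo($path, PATHINFO_EXTENSION));
    return !in_array($ext, SKIP_EXT, true);
}

// Turn an href into an absolute URL (simplified: no "../" handling, ports ignored).
function resolve(string $href, string $base): string {
    $href = preg_replace('/#.*$/', '', trim($href));   // drop "#fragment" parts
    if (preg_match('#^https?://#i', $href)) {
        return $href;                                   // already absolute
    }
    if (preg_match('#^[a-z][a-z0-9+.-]*:#i', $href)) {
        return '';                                      // mailto:, javascript:, etc.
    }
    $p    = parse_url($base);
    $root = $p['scheme'] . '://' . $p['host'];
    if (substr($href, 0, 1) === '/') {
        return $root . $href;                           // root-relative link
    }
    $dir = preg_replace('#/[^/]*$#', '/', $p['path'] ?? '/');
    return $root . $dir . $href;                        // document-relative link
}

// Breadth-first crawl from $seed, returning at most $maxPages pages as url => html.
// $fetch is an injected callable: function (string $url): ?string.
function crawl(string $seed, int $maxPages, callable $fetch): array {
    $maxPages = min(max($maxPages, 0), MAX_PAGES);
    $pages = [];
    $seen  = [$seed => true];          // every queued URL, so no page is saved twice
    $queue = [$seed];
    while ($queue && count($pages) < $maxPages) {
        $url  = array_shift($queue);   // FIFO queue gives breadth-first order
        $html = $fetch($url);
        if ($html === null) {
            continue;                  // unreachable page: skip it
        }
        $pages[$url] = $html;
        preg_match_all('/<a\s[^>]*href\s*=\s*["\']([^"\']+)["\']/i', $html, $m);
        foreach ($m[1] as $href) {
            $next = resolve($href, $url);
            if (!isset($seen[$next]) && inScope($next, $seed)) {
                $seen[$next] = true;
                $queue[] = $next;
            }
        }
    }
    return $pages;
}
```

In a live system `$fetch` might be `function ($u) { return @file_get_contents($u) ?: null; }`, and each page would be written to the database instead of kept in memory. Several seeds can be handled by calling `crawl` once per seed; a unique URL column in the database then keeps pages from being stored twice across runs. Replacing `array_shift` with `array_pop` gives a depth-first-style order instead.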

- Indexing:

- (Indexing: 10%) Use inverted indexes to index the collected data.

- (Removing stopwords: 10%)

Remove stopwords from the page title and use the remaining title words as the keywords of the inverted indexes.
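A minimal sketch of title-based indexing, assuming a short illustrative stopword list and ASCII titles (words are split on anything that is not a letter or digit). `titleKeywords` and `buildIndex` are hypothetical names. The resulting array maps each keyword to the URLs whose titles contain it, which is the shape of an inverted index; in the real system each keyword/page pair would be a row in a database table.

```php
<?php
// Illustrative stopword list only; a real system would use a much longer one.
const STOPWORDS = ['a', 'an', 'and', 'are', 'as', 'at', 'by', 'for', 'from',
                   'in', 'is', 'of', 'on', 'or', 'the', 'to', 'with'];

// Split a title into lowercase words, then drop stopwords and duplicates.
function titleKeywords(string $title): array {
    $words = preg_split('/[^a-z0-9]+/', strtolower($title), -1, PREG_SPLIT_NO_EMPTY);
    $words = array_diff($words, STOPWORDS);
    return array_values(array_unique($words));
}

// Build an inverted index (keyword => list of URLs) from url => title pairs.
function buildIndex(array $titles): array {
    $index = [];
    foreach ($titles as $url => $title) {
        foreach (titleKeywords($title) as $word) {
            $index[$word][] = $url;
        }
    }
    ksort($index);   // alphabetical keywords make the index easy to display
    return $index;
}
```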

- Search and ranking:

- (Searching: 06%) List a page if its title includes any of the keywords in a query, where keywords are separated by spaces and matched case-insensitively. If the query is empty, list all pages.

- (Ranking: 06%) Rank the search results based on the number of keywords matched.
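Searching and ranking over an inverted index can be sketched as counting, for each page, how many distinct query keywords it matches. In this hypothetical `search` function, `$index` maps keyword => URLs, keywords are matched as whole title words (an assumption; substring matching would need another approach), and an empty query lists every page with zero matches.

```php
<?php
// Rank pages by the number of distinct query keywords found in their title words.
// $index: keyword => list of URLs; $allPages: every indexed URL (for empty queries).
function search(string $query, array $index, array $allPages): array {
    $terms = array_unique(
        preg_split('/\s+/', strtolower(trim($query)), -1, PREG_SPLIT_NO_EMPTY)
    );
    if (!$terms) {
        return array_fill_keys($allPages, 0);   // empty query: list all pages
    }
    $scores = [];
    foreach ($terms as $term) {
        foreach ($index[$term] ?? [] as $url) {
            $scores[$url] = ($scores[$url] ?? 0) + 1;
        }
    }
    arsort($scores);     // most keywords matched first
    return $scores;      // url => number of keywords matched
}
```

On PHP 8, where sorting is stable, pages with the same count keep the order in which they were first matched. The same counting can also be written in SQL with `GROUP BY` and `COUNT(*)`, which is usually preferable when the index is stored in a database.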

- Output:

- (The search results: 10%)

Failing to show the correct, complete indexes will cause misunderstanding and therefore lower your grades.

For each page in the search results, list the following data:

- a number starting from 1 for each page,

- the number of keywords matched,

- the indexed keywords,

- the hyperlinked URL (displaying the page after clicking the hyperlink),

- the page title (from the title tag), if any,

- the page keywords (from the keywords meta tag), if any, and

- the page description (from the description meta tag), if any.
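The title and the two meta fields can be read with PHP's DOM extension, and each result can then be printed with its values escaped. A sketch under assumptions: `pageInfo` and `resultRow` are hypothetical names, the HTML layout of a result entry is only illustrative, and non-ASCII pages may need explicit charset handling because `DOMDocument::loadHTML` does not always detect UTF-8.

```php
<?php
// Read the title, meta keywords, and meta description from an HTML string.
function pageInfo(string $html): array {
    $info = ['title' => '', 'keywords' => '', 'description' => ''];
    if (trim($html) === '') {
        return $info;                          // loadHTML rejects empty input
    }
    $doc = new DOMDocument();
    libxml_use_internal_errors(true);          // real-world HTML is rarely valid
    $doc->loadHTML($html);
    libxml_clear_errors();
    $titles = $doc->getElementsByTagName('title');
    if ($titles->length > 0) {
        $info['title'] = trim($titles->item(0)->textContent);
    }
    foreach ($doc->getElementsByTagName('meta') as $meta) {
        $name = strtolower($meta->getAttribute('name'));
        if ($name === 'keywords' || $name === 'description') {
            $info[$name] = trim($meta->getAttribute('content'));
        }
    }
    return $info;
}

// Render one numbered result entry; every value is HTML-escaped before output.
function resultRow(int $n, int $matched, array $keywords, string $url, array $info): string {
    $e = function ($s) { return htmlspecialchars($s, ENT_QUOTES); };
    $out = "<li>#$n (matched: $matched) keywords: " . $e(implode(' ', $keywords)) . "<br>\n"
         . '<a href="' . $e($url) . '">' . $e($url) . "</a><br>\n";
    foreach (['title', 'keywords', 'description'] as $field) {
        if ($info[$field] !== '') {            // "if any": skip missing fields
            $out .= ucfirst($field) . ': ' . $e($info[$field]) . "<br>\n";
        }
    }
    return $out . "</li>\n";
}
```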

- Checking source code:

- (Plagiarism-proof: 06%)

This button lets the instructor check for plagiarism.

Each interface includes a button “Display source,” which lists ALL the source code implementing the functions of that interface.

A single password is used for all interfaces.

A system that fails to implement this button will be highly suspected of plagiarism.

Use the following PHP script, Check.php, which also handles the Help button:

~/public_html/course/525/exercise/1/Check.php

```php
<?php
if ( ( $_POST['act'] ?? '' ) == "Display source" ) {
  if ( ( $_POST['password'] ?? '' ) == "password" ) {   // Line 03: put your own password here
    header( "Content-type: text/plain" );
    if ( $_POST['interface'] == 1 ) {
      show( "Clear.php" );
    }
    elseif ( $_POST['interface'] == 2 ) {
      show( "Index.php" );
      echo ( "\n\n\n============================ Spider.php ============================= \n\n\n" );
      show( "Spider.php" );
    }
    elseif ( $_POST['interface'] == 3 ) {
      show( "List.php" );
    }
    else
      echo "No such interface: " . $_POST['interface'];
    // Check.php itself is always listed after the interface's files.
    echo ( "\n\n\n============================== Check.php ============================== \n\n\n" );
    show( "Check.php" );
  }
  else {
    header( "Content-type: text/html" );
    echo "<html><body><h3>Wrong password: <em>";
    echo htmlspecialchars( $_POST['password'] ?? '' );   // escape user input before echoing it
    echo "</em></h3></body></html>";
  }
}
elseif ( ( $_POST['act'] ?? '' ) == "Help" ) {
  header( "Content-type: text/html" );
  show( "Help.html" );
}

// Print a file line by line; stop with an error message if it cannot be opened.
function show( $name ) {
  $file = fopen( $name, "r" ) or exit( "Unable to open file!" );
  while ( !feof( $file ) ) echo fgets( $file );
  fclose( $file );
}
?>
```

Change the password on Line 03 to the password you pick.

An Example of System Interfaces

Note that the interfaces are just an example and may not meet the exercise requirements. You should design your own interfaces. An example that does not use an iframe can be found here.

Evaluations

The following features will be considered when grading:

- Specifications:

- The instructor (or your assumed client) has given the exercise specifications in as much detail as possible. If you are confused about the specifications, ask in advance. Study the specifications very carefully. No excuses for misunderstanding or missing parts of the specifications will be accepted after grading.

- The specifications cannot cover every detail. You are free to implement issues not mentioned in the specifications, but the implementations should make sense. Implemented functions that lack common sense may cause the instructor to grade your exercise mistakenly and thus lower your grade.

- The exercise must meet the specifications. However, exercises with functions exceeding the specifications will not receive extra credit.

- Grading:

- This exercise will not be graded if the submission methods are not followed. Students take full responsibility if the website does not work.

- The same set of test data will be used for all students. The grades are primarily based on the results of testing. Other factors such as performance, programming style, algorithms, and data structures will be considered only minimally.

- Before submitting the exercise, test it comprehensively. Absolutely no extra points will be given after grading.

- This exercise will not be graded if the system entry page cannot be found at http://undcemcs02.und.edu/~user.id/525/1/, http-equiv="refresh" http://undcemcs02.und.edu/~user.id/

- The total weight of exercises is 40% of the final grade:

- 20% for Exercise I (data life cycle) and

- 20% for Exercise II (data mining and analytics).

- Indexing more than the maximum number of web pages will be penalized and only the maximum number of pages will be examined.

- Processes running longer than five minutes will be aborted by the instructor.

- Unless otherwise specified, no error checking is required; i.e., you may assume the input is always correct. For example, the maximum page number entered will always be a positive integer ≤ 500.

- Feel free to design your own interfaces. User-friendliness will be heavily considered, each function/button will be tested extensively, and the programs will be examined from the source code submitted.

- The latest version of Firefox will be used to grade exercises. Note that Chrome, Edge, and Firefox are not fully compatible; that is, your exercise may work on IE or Chrome but not on Firefox.

- The instructor will inform you of the exercise evaluations by email after grading.

- Databases:

- A database has to be used. Try to perform the tasks using SQL as much as possible, because SQL, a non-procedural language, can save you a great deal of programming effort.

- The SQL DDL commands such as “CREATE” have to be submitted, where SQL is Structured Query Language and DDL is Data Definition Language.

- From the source code submitted, the database design and programs will be examined. Poor database design or use will result in a lower grade.

- (-05%) if the database design is NOT optimal.

- (-05%) if the SQL CREATE commands of the database implementation are NOT submitted.

- There are many advantages to using databases. If a database is not used, the problems caused by not using transactions must be considered. For example, if two users enroll at the same time, the same ID may be assigned to two different customers.
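One possible schema, written as MySQL DDL inside PHP constants so it can be run with `mysqli`, is sketched below. The table and column names (`pages`, `inverted_index`) and the helper `searchSql` are assumptions, not requirements. The `UNIQUE` URL column lets the database itself reject a page saved twice, even when two crawls run at once, and the search query does the matching and ranking in SQL with `GROUP BY` and `COUNT(*)`. The reset statements use `DELETE` because MySQL refuses to `TRUNCATE` an InnoDB table that is referenced by a foreign key.

```php
<?php
// Hypothetical MySQL schema; table and column names are illustrative only.
// pages: one row per crawled URL (UNIQUE url stops a page being stored twice).
// inverted_index: one row per (keyword, page) pair.
const CREATE_SQL = [
    "CREATE TABLE pages (
        id          INT AUTO_INCREMENT PRIMARY KEY,
        url         VARCHAR(512) NOT NULL UNIQUE,
        title       VARCHAR(255),
        keywords    TEXT,
        description TEXT
     )",
    "CREATE TABLE inverted_index (
        keyword VARCHAR(64) NOT NULL,
        page_id INT NOT NULL,
        PRIMARY KEY (keyword, page_id),
        FOREIGN KEY (page_id) REFERENCES pages(id) ON DELETE CASCADE
     )",
];

// Statements a "Clear system" button could run (child table first).
const RESET_SQL = ["DELETE FROM inverted_index", "DELETE FROM pages"];

// Ranked search query with one "?" placeholder per distinct keyword.
function searchSql(int $termCount): string {
    if ($termCount === 0) {
        return "SELECT id, url, title, 0 AS matched FROM pages ORDER BY id";
    }
    $marks = implode(', ', array_fill(0, $termCount, '?'));
    return "SELECT p.id, p.url, p.title, COUNT(*) AS matched
              FROM inverted_index i JOIN pages p ON p.id = i.page_id
             WHERE i.keyword IN ($marks)
             GROUP BY p.id, p.url, p.title
             ORDER BY matched DESC, p.id";
}
```

With `mysqli`, the search query would be prepared with `$db->prepare(searchSql(count($terms)))` and bound with `$stmt->bind_param(str_repeat('s', count($terms)), ...$terms)`, passing the distinct lowercase query keywords as `$terms`.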

- Comments:

- Make it work first and then try to extend it.

- Time management is critical for software development. If you are not able to complete the exercise, display whatever you have accomplished, so the instructor can give partial credit to your exercise.

- One way to build a complex web system from scratch is to design the user interfaces first and then implement the system button by button.

Doing it this way can simplify the construction.

The recommended construction steps are:

- Studying the specifications very carefully,

- Designing the databases (E-R modeling or normalization),

- Implementing the databases (SQL),

- Building the interfaces (HTML, CSS, and JavaScript),

- Implementing the system button by button (PHP), and

- Testing the exercise thoroughly.

- Several languages such as HTML, CSS, Unix shell, Perl, PHP, and Java are used in class demonstrations. A good programmer is not limited to specific languages. Use each language where it is most appropriate; for example, Perl is good at string processing, PHP is designed for web processing, and shell scripts are powerful.